Introduction to the Intel Omni-Path Architecture

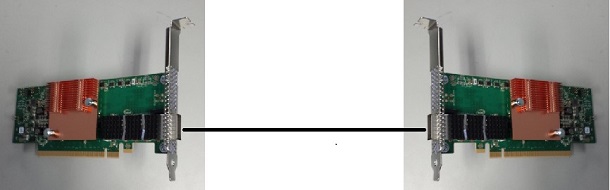

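Intel® OPA is the latest generation of Intel's high-performance fabric technology. It adds new features that improve HPC performance, scalability and quality of service. Intel OPA components include the Intel OP HFI, which provides fabric connectivity; switches that connect a scalable number of endpoints; copper and optical cables; and a Fabric Manager (FM) that identifies all nodes and provides centralized management and monitoring. The Intel OP HFI is the Intel OPA interface card that provides the host-to-switch connection. An Intel OP HFI can also be connected directly to another HFI (back-to-back connection).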

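This article shows how to install the Intel OP HFI, configure IP over Fabric, and test the fabric with prebuilt programs. Two systems are used in this example, each with an Intel® Xeon® E5 processor. Both run Red Hat Enterprise Linux* 7.2 and have Gigabit Ethernet adapters connected through a Gigabit Ethernet router.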

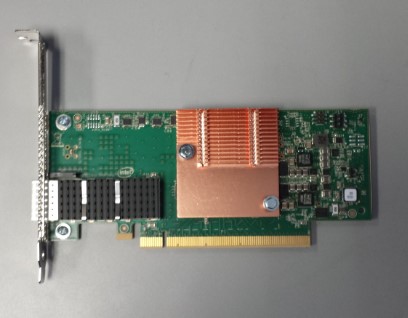

Intel Omni-Path Host Fabric Interface

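The Intel OP HFI is a standard PCIe* card that connects to a switch or to another HFI. Two models are currently available: a PCIe x16 card that supports 100 Gbps and a PCIe x8 card that supports 56 Gbps. It is designed for low latency and high bandwidth, and can be configured with 0 to 8 virtual lanes plus one management lane. The MTU size can be configured as 2, 4, 6, 8 or 10 KB.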

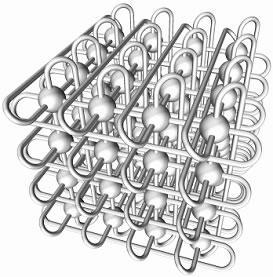

The photo above shows the Intel OP HFI PCIe x16 card.

Hardware Installation

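We use two servers with Intel Xeon E5 processors running Red Hat 7.2. Each has a Gigabit Ethernet card, and the two are connected through a router; their IP addresses are 10.23.3.27 and 10.23.3.148. We install an Intel OP HFI PCIe x16 card in each server and connect the two cards back to back with an Intel OPA cable.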

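First shut down the machines, install the Intel OP HFI cards, connect them with the Intel OPA cable, and power the machines on. Check that the green LED on each Intel OP HFI is lit, which indicates that the HFI link is up.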

Next, confirm that the system detects the Intel OP HFI with the lspci command:

```
# lspci -vv | grep Omni
18:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [discrete] (rev 11)
Subsystem: Intel Corporation Omni-Path HFI Silicon 100 Series [discrete]
```

The first field, 18:00.0, is the PCI slot address; the second, "Fabric controller", is the device class; the last field is the device name of the Intel OP HFI.
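When this check has to be repeated on many nodes, the slot address can be pulled out of the same lspci output by a script. A minimal sketch, assuming bash and awk and that the adapter's line contains both "Fabric controller:" and "Omni-Path HFI" as in the output above; the function name `opa_hfi_slots` is hypothetical:

```bash
#!/usr/bin/env bash
# Print the PCI slot address (first field) of every Omni-Path HFI found in
# `lspci` output read on stdin. Assumption: the device line contains
# "Fabric controller:" and "Omni-Path HFI", as in the example output.
opa_hfi_slots() {
  awk '/Fabric controller:/ && /Omni-Path HFI/ { print $1 }'
}

# Usage on a real host (not run here): show full details for each HFI found.
#   for slot in $(lspci | opa_hfi_slots); do lspci -vv -s "$slot"; done
```

Filtering on the class string as well as the device name keeps the "Subsystem:" line, which also mentions Omni-Path, out of the result.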

To confirm the PCIe link speed of the Intel OP HFI, run "lspci -vv" for more detailed output and look for the slot number found above (18:00.0):

```
# lspci -vv
...................................................
18:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [discrete] (rev 11)
Subsystem: Intel Corporation Omni-Path HFI Silicon 100 Series [discrete]
Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr+ Stepping- SERR+ FastB2B- DisINTx+
Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
Latency: 0
Interrupt: pin A routed to IRQ 38
NUMA node: 0
Region 0: Memory at a0000000 (64-bit, non-prefetchable) [size=64M]
Expansion ROM at <ignored> [disabled]
Capabilities: [40] Power Management version 3
Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot-,D3cold-)
Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
Capabilities: [70] Express (v2) Endpoint, MSI 00
DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 <8us
ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0.000W
DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported+
RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-
MaxPayload 256 bytes, MaxReadReq 512 bytes
DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-
LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L0s <4us, L1 <64us
ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+
LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
DevCap2: Completion Timeout: Range ABCD, TimeoutDis+, LTR-, OBFF Not Supported
DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-, LTR-, OBFF Disabled
LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-
Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
Compliance De-emphasis: -6dB
LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+
EqualizationPhase2+, EqualizationPhase3+, LinkEqualizationRequest-
...................................................
```

LnkCap and LnkSta show a speed of 8GT/s and a width of x16, so the card sits in a PCIe Gen3 x16 slot. 8 GT/s is the per-lane transfer rate of PCIe Gen3; across 16 lanes, with 128b/130b encoding, this gives about 126 Gb/s per direction, enough for the 100 Gb/s HFI.
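The bandwidth arithmetic can be checked with a small helper. A sketch, assuming bash with awk and sed; `lnksta_speed_width` and `pcie_gen3_gbps` are hypothetical names, the 128b/130b factor applies only to PCIe Gen3 (8 GT/s), and protocol overhead on top of the line encoding is ignored, so real throughput is somewhat lower:

```bash
#!/usr/bin/env bash
# Usable PCIe Gen3 bandwidth per direction in Gb/s: GT/s per lane x lanes x 128/130.
# Assumption: 128b/130b line encoding (Gen3 only); TLP/DLLP overhead is ignored.
pcie_gen3_gbps() {
  local gts=$1 width=$2
  awk -v s="$gts" -v w="$width" 'BEGIN { printf "%.2f\n", s * w * 128 / 130 }'
}

# Print "<GT/s> <lanes>" from the LnkSta line of `lspci -vv` output on stdin,
# e.g. "LnkSta: Speed 8GT/s, Width x16, ..." -> "8 16". LnkSta2 lines do not match.
lnksta_speed_width() {
  sed -n 's/.*LnkSta:.*Speed \([0-9.]*\)GT\/s.*Width x\([0-9]*\).*/\1 \2/p'
}

# Usage on a real host (not run here):
#   read -r gts lanes < <(lspci -vv -s 18:00.0 | lnksta_speed_width)
#   pcie_gen3_gbps "$gts" "$lanes"
```

For the x16 Gen3 link above, `pcie_gen3_gbps 8 16` prints 126.03.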

Host Software Installation

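The Intel Omni-Path fabric host software comes in two main packages:

- Basic: typically installed on compute nodes.

- Intel® Omni-Path Fabric Suite (IFS): a superset of the Basic package, typically installed on head/management nodes. In addition to the Basic package it includes:

  - FastFabric: a collection of utilities for installing, testing and monitoring the fabric

  - Intel Omni-Path Fabric Manager

  - FMGUI: Intel's Fabric Manager graphical user interface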

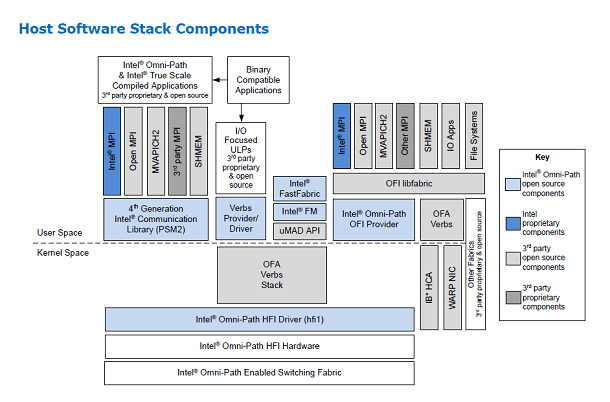

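Each fabric needs at least one FM; if several FMs coexist, only one of them is the master FM and the others are standby FMs. The FM identifies all nodes, switches and routers, assigns local IDs (LIDs), maintains routing tables for all nodes, and automatically scans for changes and reprograms the fabric. The recommended host memory reservation is 500 MB on compute nodes and 1 GB per FM instance. The figure above shows all components of the fabric host software stack (source: "Intel® Omni-Path Fabric Host Software User Guide, Rev. 5.0").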

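Before installing the host software, install the required OS RPMs. See section 1.1.1.1, "OS RPMs Installation Prerequisites", in the "Intel® Omni-Path Fabric Software Installation Guide (Rev 5.0)".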

Next, install the IFS package on both machines: unpack it and run the same installation script on each machine.

```
# tar -xvf IntelOPA-IFS.RHEL72-x86_64.10.3.0.0.81.tgz
# cd IntelOPA-IFS.RHEL72-x86_64.10.3.0.0.81/
# ./INSTALL -a
Installing All OPA Software
Determining what is installed on system...
-------------------------------------------------------------------------------
Preparing OFA 10_3_0_0_82 release for Install...
...
A System Reboot is recommended to activate the software changes
Done Installing OPA Software.
Rebuilding boot image with "/usr/bin/dracut -f"...done.
```

Then reboot the machine:

```
# reboot
```

After the machine reboots, load the Intel OP HFI driver and run lsmod to check the Intel OPA modules:

```
# modprobe hfi1
# lsmod | grep hfi1
hfi1 633634 1
rdmavt 57992 1 hfi1
ib_mad 51913 5 hfi1,ib_cm,ib_sa,rdmavt,ib_umad
ib_core 98787 14 hfi1,rdma_cm,ib_cm,ib_sa,iw_cm,xprtrdma,ib_mad,ib_ucm,rdmavt,ib_iser,ib_umad,ib_uverbs,ib_ipoib,ib_isert
i2c_algo_bit 13413 2 ast,hfi1
i2c_core 40582 6 ast,drm,hfi1,ipmi_ssif,drm_kms_helper,i2c_algo_bit
```

For installation error messages, see the /var/log/opa.log file.
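Both checks can be wrapped in a small script for repeated use on many nodes. A sketch, assuming bash; the function names are hypothetical, the expected module list (hfi1, rdmavt, ib_core) is taken from the lsmod output above, and the assumption that installer problems show up as lines containing "error" or "fail" in /var/log/opa.log has not been checked against the real log format:

```bash
#!/usr/bin/env bash
# Read `lsmod` output on stdin and print each expected OPA module that is absent.
# Returns 0 when all of them are loaded, 1 otherwise.
missing_opa_modules() {
  local lsmod_out mod missing=0
  lsmod_out=$(cat)
  for mod in hfi1 rdmavt ib_core; do
    # Compare against the first column (module name) only.
    if ! printf '%s\n' "$lsmod_out" |
         awk -v m="$mod" '$1 == m { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "$mod"
      missing=1
    fi
  done
  return "$missing"
}

# Show numbered lines of an install log (default /var/log/opa.log) that mention
# errors or failures. Assumption: plain-text log, problems contain "error"/"fail".
opa_log_errors() {
  grep -inE 'error|fail' "${1:-/var/log/opa.log}"
}

# Usage on a real host (not run here):
#   lsmod | missing_opa_modules && echo "OPA modules loaded"
#   opa_log_errors
```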

Post-Installation

To configure IP over Fabric with the Intel OPA software, run the installation script again:

```
# ./INSTALL
```

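Choose the configuration option 2) Reconfigure OFA IP over IB. In this example we set the IP over Fabric (IPoFabric) address to 192.168.100.101. You can then check the IP over Fabric interface configuration: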

```
# more /etc/sysconfig/network-scripts/ifcfg-ib0
DEVICE=ib0
BOOTPROTO=static
IPADDR=192.168.100.101
BROADCAST=192.168.100.255
NETWORK=192.168.100.0
NETMASK=255.255.255.0
ONBOOT=yes
NM_CONTROLLED=no
CONNECTED_MODE=yes
MTU=65520
```

Bring up the IP over Fabric interface and confirm its IP address:

```
# ifup ib0
# ifconfig ib0
ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 65520
inet 192.168.100.101 netmask 255.255.255.0 broadcast 192.168.100.255
inet6 fe80::211:7501:179:311 prefixlen 64 scopeid 0x20<link>
Infiniband hardware address can be incorrect! Please read BUGS section in ifconfig(8).
infiniband 80:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00 txqueuelen 256 (InfiniBand)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 16 bytes 2888 (2.8 KiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
```

System log messages can be found in /var/log/messages, and OPA-related log messages in /var/log/opa.log.
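The second machine needs the same ifcfg-ib0 file with only IPADDR changed (192.168.100.102 in this example). The installer's option 2 generates it as described above; as an alternative, a sketch like the following could write it directly. It assumes bash, the 192.168.100.0/24 network and the other settings of this example; `make_ifcfg_ib0` and `ib0_ipv4` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Print an ifcfg-ib0 file for IP over Fabric on the 192.168.100.0/24 network,
# mirroring the file generated on the first host; only IPADDR varies per host.
make_ifcfg_ib0() {
  local ip=$1
  cat <<EOF
DEVICE=ib0
BOOTPROTO=static
IPADDR=${ip}
BROADCAST=192.168.100.255
NETWORK=192.168.100.0
NETMASK=255.255.255.0
ONBOOT=yes
NM_CONTROLLED=no
CONNECTED_MODE=yes
MTU=65520
EOF
}

# Extract the first IPv4 address from `ifconfig ib0` output read on stdin
# ("inet6" lines are skipped because the first field must be exactly "inet").
ib0_ipv4() {
  awk '$1 == "inet" { print $2; exit }'
}

# Usage on the second host (not run here):
#   make_ifcfg_ib0 192.168.100.102 > /etc/sysconfig/network-scripts/ifcfg-ib0
#   ifup ib0 && ifconfig ib0 | ib0_ipv4
```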

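So far we have installed the software on the first host (10.23.3.27). Repeat the steps above on the second machine (10.23.3.148), configuring its IP over Fabric address as 192.168.100.102. Then confirm that 192.168.100.102 can be pinged from the first server: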

```
# ping 192.168.100.102
PING 192.168.100.102 (192.168.100.102) 56(84) bytes of data.
64 bytes from 192.168.100.102: icmp_seq=1 ttl=64 time=1.34 ms
64 bytes from 192.168.100.102: icmp_seq=2 ttl=64 time=0.303 ms
64 bytes from 192.168.100.102: icmp_seq=3 ttl=64 time=0.253 ms
^C
```

The opainfo command shows the state of the fabric port:

```
# opainfo
hfi1_0:1 PortGUID:0x0011750101790311
PortState: Init (LinkUp)
LinkSpeed Act: 25Gb En: 25Gb
LinkWidth Act: 4 En: 4
LinkWidthDnGrd ActTx: 4 Rx: 4 En: 1,2,3,4
LCRC Act: 14-bit En: 14-bit,16-bit,48-bit
Xmit Data: 0 MB Pkts: 0
Recv Data: 0 MB Pkts: 0
Link Quality: 5 (Excellent)
```

This shows that the Intel OP HFI link runs at 100 Gb/s (4 lanes × 25 Gb/s).
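The link rate and the port state can also be read from opainfo in scripts. A sketch, assuming bash and awk and the field layout shown above; `opa_link_rate` and `opa_port_state` are hypothetical names. At this point `opa_port_state` reports Init, because no Fabric Manager has activated the port yet:

```bash
#!/usr/bin/env bash
# Active link rate from `opainfo` output on stdin: per-lane speed
# (LinkSpeed Act, in Gb/s) multiplied by the active lane count (LinkWidth Act).
opa_link_rate() {
  awk '
    $1 == "LinkSpeed" && $2 == "Act:" { speed = $3; sub(/Gb$/, "", speed) }
    $1 == "LinkWidth" && $2 == "Act:" { width = $3 }
    END { if (speed != "" && width != "") print speed * width "Gb" }
  '
}

# Logical port state (for example Init or Active) from `opainfo` output on stdin.
opa_port_state() {
  awk '$1 == "PortState:" { print $2; exit }'
}

# Usage on a real host (not run here):
#   opainfo | opa_link_rate
#   opainfo | opa_port_state
```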

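Next, enable the Intel OPA Fabric Manager on one host and start it. Note that the FM goes through many steps, including physical subnet establishment, subnet discovery, information gathering, LID assignment, path establishment, port configuration, switch configuration and subnet activation.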

```
# opaconfig -E opafm
# service opafm start
Redirecting to /bin/systemctl start opafm.service
```

You can query the status of the OPA Fabric Manager at any time:

```
# service opafm status
Redirecting to /bin/systemctl status opafm.service
● opafm.service - OPA Fabric Manager
Loaded: loaded (/usr/lib/systemd/system/opafm.service; enabled; vendor preset: disabled)
Active: active (running) since Mon 2017-01-09 23:42:41 EST; 21min ago
Process: 6758 ExecStart=/usr/lib/opa-fm/bin/opafmd -D (code=exited, status=0/SUCCESS)
Main PID: 6759 (opafmd)
CGroup: /system.slice/opafm.service
├─6759 /usr/lib/opa-fm/bin/opafmd -D
└─6760 /usr/lib/opa-fm/runtime/sm -e sm_0

# opainfo
hfi1_0:1 PortGID:0xfe80000000000000:0011750101790311
PortState: Active
LinkSpeed Act: 25Gb En: 25Gb
LinkWidth Act: 4 En: 4
LinkWidthDnGrd ActTx: 4 Rx: 4 En: 3,4
LCRC Act: 14-bit En: 14-bit,16-bit,48-bit Mgmt: True
LID: 0x00000001-0x00000001 SM LID: 0x00000001 SL: 0
Xmit Data: 0 MB Pkts: 21
Recv Data: 0 MB Pkts: 22
Link Quality: 5 (Excellent)
```

PortState is now Active, which means the link is fully functional. To display the Intel OP HFI port information and monitor link quality, run the opaportinfo command:

```
# opaportinfo
Present Port State:
Port 1 Info
Subnet: 0xfe80000000000000 GUID: 0x0011750101790311
LocalPort: 1 PortState: Active
PhysicalState: LinkUp
OfflineDisabledReason: None
IsSMConfigurationStarted: True NeighborNormal: True
BaseLID: 0x00000001 SMLID: 0x00000001
LMC: 0 SMSL: 0
PortType: Unknown LimtRsp/Subnet: 32 us, 536 ms
M_KEY: 0x0000000000000000 Lease: 0 s Protect: Read-only
LinkWidth Act: 4 En: 4 Sup: 1,2,3,4
LinkWidthDnGrd ActTx: 4 Rx: 4 En: 3,4 Sup: 1,2,3,4
LinkSpeed Act: 25Gb En: 25Gb Sup: 25Gb
PortLinkMode Act: STL En: STL Sup: STL
PortLTPCRCMode Act: 14-bit En: 14-bit,16-bit,48-bit Sup: 14-bit,16-bit,48-bit
NeighborMode MgmtAllowed: No FWAuthBypass: Off NeighborNodeType: HFI
NeighborNodeGuid: 0x00117501017444e0 NeighborPortNum: 1
Capability: 0x00410022: CN CM APM SM
Capability3: 0x0008: SS
SM_TrapQP: 0x0 SA_QP: 0x1
IPAddr IPV6/IPAddr IPv4: ::/0.0.0.0
VLs Active: 8+1
VL: Cap 8+1 HighLimit 0x0000 PreemptLimit 0x0000
VLFlowControlDisabledMask: 0x00000000 ArbHighCap: 16 ArbLowCap: 16
MulticastMask: 0x0 CollectiveMask: 0x0
P_Key Enforcement: In: Off Out: Off
MulticastPKeyTrapSuppressionEnabled: 0 ClientReregister 0
PortMode ActiveOptimize: Off PassThru: Off VLMarker: Off 16BTrapQuery: Off
FlitCtrlInterleave Distance Max: 1 Enabled: 1
MaxNestLevelTxEnabled: 0 MaxNestLevelRxSupported: 0
FlitCtrlPreemption MinInitial: 0x0000 MinTail: 0x0000 LargePktLim: 0x00
SmallPktLimit: 0x00 MaxSmallPktLimit 0x00 PreemptionLimit: 0x00
PortErrorActions: 0x172000: CE-UVLMCE-BCDCE-BTDCE-BHDR-BVLM
BufferUnits:VL15Init 0x0110 VL15CreditRate 0x00 CreditAck 0x0 BufferAlloc 0x3
MTU Supported: (0x6) 8192 bytes
MTU Active By VL:
00: 8192 01: 0 02: 0 03: 0 04: 0 05: 0 06: 0 07: 0
08: 0 09: 0 10: 0 11: 0 12: 0 13: 0 14: 0 15: 2048
16: 0 17: 0 18: 0 19: 0 20: 0 21: 0 22: 0 23: 0
24: 0 25: 0 26: 0 27: 0 28: 0 29: 0 30: 0 31: 0
StallCnt/VL: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
HOQLife VL[00,07]: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
HOQLife VL[08,15]: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
HOQLife VL[16,23]: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
HOQLife VL[24,31]: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
ReplayDepth Buffer 0x80; Wire 0x0c
DiagCode: 0x0000 LedEnabled: Off
LinkDownReason: None NeighborLinkDownReason: None
OverallBufferSpace: 0x0880
Violations M_Key: 0 P_Key: 0 Q_Key: 0
```

Query the Intel OP HFI information with hfi1_control:

```
# hfi1_control -i
Driver Version: 0.9-294
Driver SrcVersion: A08826F35C95E0E8A4D949D
Opa Version: 10.3.0.0.81
0: BoardId: Intel Omni-Path Host Fabric Interface Adapter 100 Series
0: Version: ChipABI 3.0, ChipRev 7.17, SW Compat 3
0: ChipSerial: 0x00790311
0,1: Status: 5: LinkUp 4: ACTIVE
0,1: LID=0x1 GUID=0011:7501:0179:0311
```

Next, stop the firewall, which could otherwise block the connections the MPI benchmark opens between the hosts, and check its status:

```
# systemctl stop firewalld
# systemctl status firewalld
● firewalld.service - firewalld - dynamic firewall daemon
Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: enabled)
Active: inactive (dead) since Thu 2017-01-12 21:35:18 EST; 1s ago
Process: 137597 ExecStart=/usr/sbin/firewalld --nofork --nopid $FIREWALLD_ARGS (code=exited, status=0/SUCCESS)
Main PID: 137597 (code=exited, status=0/SUCCESS)
Jan 12 21:34:13 ebi2s28c01.jf.intel.com firewalld[137597]: 2017-01-12 21:34:1...
Jan 12 21:35:17 ebi2s28c01.jf.intel.com systemd[1]: Stopping firewalld - dyna...
Jan 12 21:35:18 ebi2s28c01.jf.intel.com systemd[1]: Stopped firewalld - dynam...
Hint: Some lines were ellipsized, use -l to show in full.
```

Running the Intel MPI Benchmarks

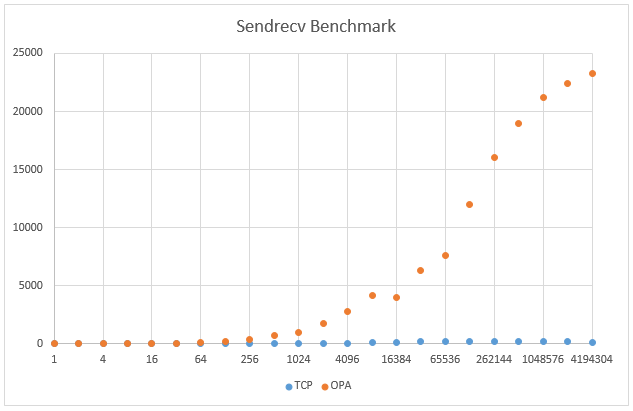

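In this section we use a benchmark program to compare MPI performance over TCP with performance over Intel OPA. Note that the numbers are for reference and illustration only, because the tests were run on pre-production servers.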

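Intel® Parallel Studio 2017 Update 1 is installed on both systems. First, we run the Sendrecv benchmark between the servers over TCP. Sendrecv is a parallel transfer benchmark in the Intel® MPI Benchmarks IMB-MPI1 suite, which is available with Intel Parallel Studio. To use TCP for this benchmark, specify "-genv I_MPI_FABRICS shm:tcp":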

```
[root@ebi2s28c01 ~]# mpirun -genv I_MPI_FABRICS shm:tcp -host ebi2s28c01 -n 1 /opt/intel/impi/2017.1.132/bin64/IMB-MPI1 Sendrecv : -host ebi2s28c02 -n 1 /opt/intel/impi/2017.1.132/bin64/IMB-MPI1
Source Parallel Studio
Intel(R) Parallel Studio XE 2017 Update 1 for Linux*
Copyright (C) 2009-2016 Intel Corporation. All rights reserved.
#------------------------------------------------------------
# Intel (R) MPI Benchmarks 2017, MPI-1 part
#------------------------------------------------------------
# Date : Fri Jan 13 21:02:36 2017
# Machine : x86_64
# System : Linux
# Release : 3.10.0-327.el7.x86_64
# Version : #1 SMP Thu Oct 29 17:29:29 EDT 2015
# MPI Version : 3.1
# MPI Thread Environment:
# Calling sequence was:
# /opt/intel/impi/2017.1.132/bin64/IMB-MPI1 Sendrecv
# Minimum message length in bytes: 0
# Maximum message length in bytes: 4194304
#
# MPI_Datatype : MPI_BYTE
# MPI_Datatype for reductions : MPI_FLOAT
# MPI_Op : MPI_SUM
#
#
# List of Benchmarks to run:
# Sendrecv
#-----------------------------------------------------------------------------
# Benchmarking Sendrecv
# #processes = 2
#-----------------------------------------------------------------------------
#bytes #repetitions t_min[usec] t_max[usec] t_avg[usec] Mbytes/sec
0 1000 63.55 63.57 63.56 0.00
1 1000 62.30 62.32 62.31 0.03
2 1000 53.16 53.17 53.16 0.08
4 1000 69.16 69.16 69.16 0.12
8 1000 63.27 63.27 63.27 0.25
16 1000 62.46 62.47 62.46 0.51
32 1000 57.75 57.76 57.76 1.11
64 1000 62.57 62.60 62.58 2.04
128 1000 45.21 45.23 45.22 5.66
256 1000 45.04 45.08 45.06 11.36
512 1000 50.28 50.28 50.28 20.37
1024 1000 60.76 60.78 60.77 33.69
2048 1000 81.36 81.38 81.37 50.33
4096 1000 121.30 121.37 121.33 67.50
8192 1000 140.51 140.63 140.57 116.50
16384 1000 232.06 232.14 232.10 141.16
32768 1000 373.63 373.74 373.69 175.35
65536 640 799.55 799.92 799.74 163.86
131072 320 1473.76 1474.09 1473.92 177.83
262144 160 2806.43 2808.14 2807.28 186.70
524288 80 6031.64 6033.80 6032.72 173.78
1048576 40 9327.35 9330.27 9328.81 224.77
2097152 20 19665.44 19818.81 19742.13 211.63
4194304 10 50839.90 52294.80 51567.35 160.41
# All processes entering MPI_Finalize
```

Next, edit the /etc/hosts file to add host-name aliases for the IP over Fabric addresses (192.168.100.101 and 192.168.100.102):

```
# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.23.3.27 ebi2s28c01
10.23.3.148 ebi2s28c02
192.168.100.101 ebi2s28c01-opa
192.168.100.102 ebi2s28c02-opa
```

To use Intel OPA, specify "-genv I_MPI_FABRICS shm:tmi -genv I_MPI_TMI_PROVIDER psm2" or "-PSM2". PSM2 stands for Intel® Performance Scaled Messaging 2, a high-performance protocol that provides a low-level communication interface for the Intel® Omni-Path family of products. With Intel OPA, performance improves significantly:

```
[root@ebi2s28c01 ~]# mpirun -PSM2 -host ebi2s28c01-opa -n 1 /opt/intel/impi/2017.1.132/bin64/IMB-MPI1 Sendrecv : -host ebi2s28c02-opa -n 1 /opt/intel/impi/2017.1.132/bin64/IMB-MPI1
Source Parallel Studio
Intel(R) Parallel Studio XE 2017 Update 1 for Linux*
Copyright (C) 2009-2016 Intel Corporation. All rights reserved.
#------------------------------------------------------------
# Intel (R) MPI Benchmarks 2017, MPI-1 part
#------------------------------------------------------------
# Date : Fri Jan 27 22:31:23 2017
# Machine : x86_64
# System : Linux
# Release : 3.10.0-327.el7.x86_64
# Version : #1 SMP Thu Oct 29 17:29:29 EDT 2015
# MPI Version : 3.1
# MPI Thread Environment:
# Calling sequence was:
# /opt/intel/impi/2017.1.132/bin64/IMB-MPI1 Sendrecv
# Minimum message length in bytes: 0
# Maximum message length in bytes: 4194304
#
# MPI_Datatype : MPI_BYTE
# MPI_Datatype for reductions : MPI_FLOAT
# MPI_Op : MPI_SUM
#
#
# List of Benchmarks to run:
# Sendrecv
#-----------------------------------------------------------------------------
# Benchmarking Sendrecv
# #processes = 2
#-----------------------------------------------------------------------------
#bytes #repetitions t_min[usec] t_max[usec] t_avg[usec] Mbytes/sec
0 1000 1.09 1.09 1.09 0.00
1 1000 1.05 1.05 1.05 1.90
2 1000 1.07 1.07 1.07 3.74
4 1000 1.08 1.08 1.08 7.43
8 1000 1.02 1.02 1.02 15.62
16 1000 1.24 1.24 1.24 25.74
32 1000 1.21 1.22 1.22 52.63
64 1000 1.26 1.26 1.26 101.20
128 1000 1.20 1.20 1.20 213.13
256 1000 1.31 1.31 1.31 392.31
512 1000 1.36 1.36 1.36 754.03
1024 1000 2.16 2.16 2.16 948.54
2048 1000 2.38 2.38 2.38 1720.91
4096 1000 2.92 2.92 2.92 2805.56
8192 1000 3.90 3.90 3.90 4200.97
16384 1000 8.17 8.17 8.17 4008.37
32768 1000 10.44 10.44 10.44 6278.62
65536 640 17.15 17.15 17.15 7641.97
131072 320 21.90 21.90 21.90 11970.32
262144 160 32.62 32.62 32.62 16070.33
524288 80 55.30 55.30 55.30 18961.18
1048576 40 99.05 99.05 99.05 21172.45
2097152 20 187.19 187.30 187.25 22393.31
4194304 10 360.39 360.39 360.39 23276.25
# All processes entering MPI_Finalize
```

The figure summarizes the results of running the benchmark over TCP and over Intel OPA; the x-axis is the message length in bytes and the y-axis is the throughput in Mbytes/sec. Over TCP the throughput peaks at 224.77 Mbytes/sec (1 MB messages), while over Intel OPA it reaches 23276.25 Mbytes/sec (4 MB messages), and the average time for messages of up to 512 bytes drops from between 45 and 70 µs to between 1.0 and 1.4 µs.
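To reproduce such a comparison, the bandwidth column can be pulled out of the two IMB logs and joined on message size. A sketch, assuming bash, that the output of each run was saved to a file (the names imb_tcp.txt and imb_psm2.txt in the usage are hypothetical), and that result rows are the six-column lines whose first field is an integer, as in the listings above; `imb_bandwidth` and `imb_compare` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Print "bytes Mbytes/sec" pairs from IMB Sendrecv output read on stdin.
# Result rows have six fields and start with an integer message size;
# header and comment lines ("#bytes ...", "# ...") are skipped.
imb_bandwidth() {
  awk '$1 ~ /^[0-9]+$/ && NF == 6 { print $1, $6 }'
}

# Join two runs on message size and print:
#   bytes  run1_MB/s  run2_MB/s  run2/run1 ratio ("n/a" when run1 is 0).
# join needs both inputs sorted the same way on the key, hence sort -k1,1.
imb_compare() {
  join <(imb_bandwidth < "$1" | sort -k1,1) <(imb_bandwidth < "$2" | sort -k1,1) |
    sort -n -k1,1 |
    awk '{ ratio = ($2 > 0) ? sprintf("%.1f", $3 / $2) : "n/a"; print $1, $2, $3, ratio }'
}

# Usage (not run here): save each mpirun output, then compare the two runs.
#   imb_compare imb_tcp.txt imb_psm2.txt
```

The three-column output can be fed to any plotting tool to redraw the throughput chart.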

Summary

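This document showed how to install Intel OP HFI cards in two systems and connect the hosts back to back with an Intel OPA cable, then install the Intel Omni-Path Fabric host software on the hosts. All configuration and verification steps, and the startup of the required services, were described in detail. Finally, a simple Intel MPI benchmark was run to illustrate the benefit of using Intel OPA.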

Configuring Management Node Priority

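Edit the /etc/opa-fm/opafm.xml file.

Find the `<Priority>0</Priority>` setting, change 0 to the number you need (a larger number means a higher priority), and save the file.

Restart the service:

```
# systemctl restart opafm
```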

When the master server fails, the standby server takes over; when the master recovers, it takes over again.

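Edit the /etc/opa-fm/opafm.xml file.

Find the `<ElevatedPriority>0</ElevatedPriority>` setting, change 0 to the number you need (a larger number means a higher priority), and save the file.

Restart the service:

```
# systemctl restart opafm
```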

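Elevated priority (normally set higher than the normal priority of every management node) is generally used together with sticky failover: after the master server goes down and the standby takes over, the original master does not take over again even when it recovers, which reduces disruption to the fabric.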

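For example, take two management nodes, one with a normal priority of 8 and the other with a normal priority of 1, both with an elevated priority of 15, and sticky failover enabled. After the standby node takes over from the master, the original master does not take over again, even when it recovers.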
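The example can be applied by editing opafm.xml on each management node. A sketch, assuming bash and sed, and assuming each setting appears as a single-line element such as `<Priority>0</Priority>`; `set_fm_priority` is a hypothetical helper. Because it rewrites every matching element in the file, check the result (for example with `grep -n Priority`) before restarting opafm:

```bash
#!/usr/bin/env bash
# Set the normal and elevated FM priority in an opafm.xml file, editing it in
# place and keeping a .bak backup. Every <Priority> and <ElevatedPriority>
# element in the file is rewritten. The "<Priority>" pattern does not match
# inside "<ElevatedPriority>" because it requires "<" directly before "Priority".
set_fm_priority() {
  local file=$1 prio=$2 elevated=$3
  sed -i.bak \
    -e "s|<Priority>[0-9]*</Priority>|<Priority>${prio}</Priority>|g" \
    -e "s|<ElevatedPriority>[0-9]*</ElevatedPriority>|<ElevatedPriority>${elevated}</ElevatedPriority>|g" \
    "$file"
}

# Usage for the two-node example (not run here), then restart the FM on each node:
#   node 1: set_fm_priority /etc/opa-fm/opafm.xml 8 15
#   node 2: set_fm_priority /etc/opa-fm/opafm.xml 1 15
#   systemctl restart opafm
```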

Yao L
